Can we use natural sounds, and in particular speech signals, to estimate hearing thresholds based on ear-EEG?

Hearing is one of our most important senses: it enables us to communicate with the outside world in a way that none of our other senses can. Hearing loss can significantly impact a person’s quality of life. Individuals with untreated hearing loss are more likely to develop depression, anxiety, and feelings of inadequacy. They may also avoid or withdraw from social situations. In children, hearing loss can negatively affect speech and language acquisition as well as social and emotional development.

People suffering from hearing loss can benefit from hearing aids, which make sounds audible again. To work properly, a hearing aid must be fitted closely to the hearing abilities of the individual user. In some cases, hearing can deteriorate relatively quickly, especially with increasing age. It is therefore important to refit the hearing aid regularly so that the user can continue to communicate and carry out day-to-day activities.

Traditionally, hearing aid fitting is carried out in the clinic, where behavioral tests such as hearing threshold estimation based on pure tone stimuli and speech discrimination testing are used to characterize the hearing loss. Alternatively, hearing loss can be characterized based on electrophysiological measures. This is typically based on auditory steady-state responses (ASSR) recorded from a few electroencephalography (EEG) channels placed on the scalp of the test person.

Ear-EEG is a novel EEG recording method in which EEG signals are recorded from electrodes located on an earpiece placed in the ear. Ear-EEG can potentially enable integration of EEG recording into hearing aids. This could provide an objective tool for individualized refitting of the hearing aid without the need to visit the clinic.

Previous investigations have shown that ASSR can be measured reliably using the ear-EEG method and that ear-EEG ASSR recordings can be used to estimate hearing threshold levels for both normal-hearing and hearing-impaired subjects.

Traditionally, ASSR-based hearing threshold estimation has been performed using amplitude-modulated continuous pure tones or noise, or by presenting discrete stimuli such as chirps at a constant repetition rate. Monotonous stimuli of this kind are unpleasant and uninteresting to listen to, and as a result they leave the user tired and unmotivated.
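To make the conventional stimulus concrete, the following minimal sketch generates a sinusoidally amplitude-modulated pure tone. It is an illustration only and not the project's stimulus code: the function name `am_tone` and all parameter values (1000 Hz carrier, 40 Hz modulation rate, full modulation depth, 16 kHz sampling rate) are assumptions chosen for the example.

```python
import math


def am_tone(carrier_hz=1000.0, mod_hz=40.0, depth=1.0, duration_s=1.0, fs=16000):
    """Return a sinusoidally amplitude-modulated pure tone as a list of floats.

    All default values are illustrative assumptions, not values from the project.
    """
    n_samples = int(round(duration_s * fs))
    samples = []
    for n in range(n_samples):
        t = n / fs
        # Slowly varying envelope at the modulation rate.
        envelope = 1.0 + depth * math.sin(2.0 * math.pi * mod_hz * t)
        # Pure tone carrier at the test frequency.
        carrier = math.sin(2.0 * math.pi * carrier_hz * t)
        # Divide by (1 + depth) so the signal stays within [-1, 1].
        samples.append(envelope * carrier / (1.0 + depth))
    return samples


signal = am_tone()
```

With these assumed values the envelope repeats 40 times per second, which is the periodic structure that an ASSR analysis would look for in the recorded EEG.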

Natural sounds such as speech are much more pleasant to listen to, so users are more likely to agree to a speech-based hearing test than to a conventional electrophysiological hearing test based on synthetic, monotonous stimuli. Users are also more willing to perform a hearing test if it fits into their existing daily activities. A speech-based hearing test would therefore be more convenient and usable.

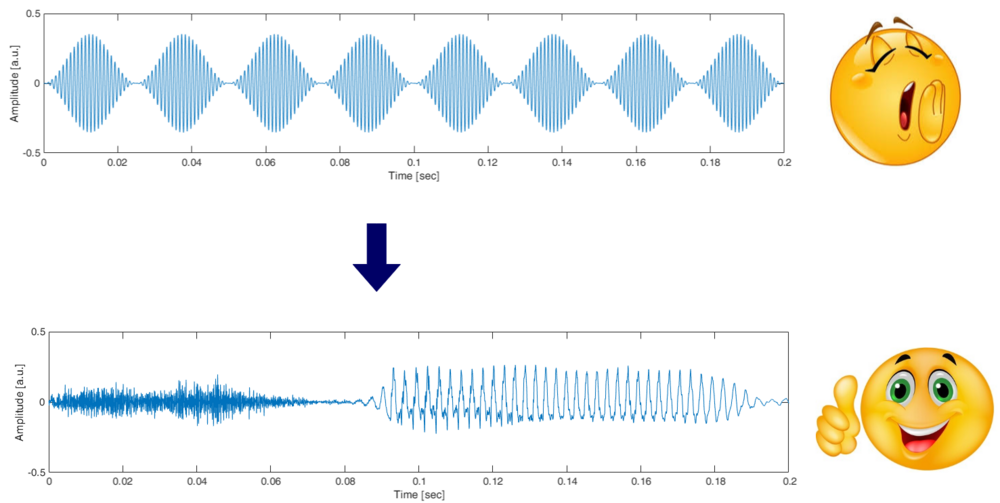

The aim of this project is to investigate the possibility of using natural sounds, and in particular speech signals, to estimate hearing thresholds based on ear-EEG. We will move from the monotonous stimuli used in conventional ASSR hearing threshold estimation to stimuli that are perceived as natural sounds, such as speech (Figure 1).

Figure 1. Top: Amplitude-modulated pure tone signal. Bottom: Speech signal.

Hearing threshold estimation using a speech signal has great potential for implementation in hearing aids, allowing the hearing aid to refit its audio processing to the estimated thresholds. The estimation could be performed in daily life while the hearing aid user is, for instance, listening to a favorite audiobook. Moreover, speech is more pleasant to listen to than monotonous stimuli, especially when the recording must be carried out over a long period of time. Finally, assessing the hearing loss in daily-life settings will save time and resources and reduce the number of visits to the clinic.

The project is funded by the William Demant Foundation, T&W Engineering and GSTS.