We are currently offering Master's, Bachelor's, and R&D engineering projects in wireless communications, networking, and data analytics.

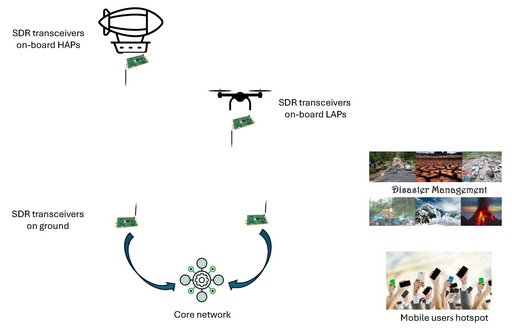

The main objective of the project is to create a mobile and centralized communication network that can be used in different applications. Current 4G LTE and 5G networks consist mainly of fixed remote radio heads (RRHs) that collect information from, and send information to, mobile users through transceivers. However, many applications require mobile RRHs, for example providing coverage to a sudden hotspot of mobile users or supporting disaster management.

The objective of the project is to create a mobile RRH on board unmanned high-altitude platforms (HAPs) and low-altitude platforms (LAPs). These airborne RRHs will be able to cognitively maneuver to optimum locations using reinforcement learning. The additional height and mobility are key to providing optimum coverage on demand. Multiple RRHs will be connected to baseband units (BBUs), which are in turn connected to the centralized core network, as shown in the figure below. Software-defined radios (SDRs) will be used as transceivers on the HAPs/LAPs and on the ground.
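To make the benefit of altitude concrete, here is a minimal geometric sketch. It assumes a flat Earth and that a ground user is served only when it sees the platform above a minimum elevation angle; the 30° threshold and the 0.3 km and 20 km altitudes are illustrative assumptions, not project parameters.

```python
import math


def coverage_radius_km(altitude_km: float, min_elevation_deg: float) -> float:
    """Flat-Earth estimate of the ground coverage radius of an aerial RRH.

    A ground user is assumed to be served only if it sees the platform at an
    elevation angle of at least min_elevation_deg (an illustrative criterion).
    """
    if altitude_km <= 0 or not 0 < min_elevation_deg <= 90:
        raise ValueError("altitude must be positive and elevation in (0, 90]")
    return altitude_km / math.tan(math.radians(min_elevation_deg))


# Hypothetical altitudes: a low-altitude drone (LAP) and a stratospheric HAP.
for name, h in [("LAP", 0.3), ("HAP", 20.0)]:
    print(f"{name}: altitude {h} km -> radius {coverage_radius_km(h, 30.0):.2f} km")
```

Because the radius grows linearly with altitude, a HAP covers a much larger area than a LAP, while a LAP can be repositioned quickly and closer to users. For very large radii the flat-Earth formula loses accuracy, since Earth curvature starts to matter.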

Students are expected to learn the basics of real-time communications using SDRs and the challenges that arise in implementation and optimization. Another learning outcome is cognitive maneuvering of unmanned aerial vehicles using reinforcement learning to achieve optimum coverage.
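The reinforcement-learning idea can be illustrated with a toy tabular Q-learning sketch. Everything here is a hypothetical simplification, not the project's method: the airspace is a 5×5 grid of hover cells, the user positions are made up, and the reward is simply the number of users within one cell (Chebyshev distance) of the platform.

```python
import random

GRID = 5  # hypothetical 5x5 grid of candidate hover cells
USERS = [(2, 3), (3, 2), (3, 3), (3, 4), (4, 3), (0, 0)]  # made-up user positions
ACTIONS = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]  # stay, east, west, north, south


def coverage(cell):
    """Reward: number of users within Chebyshev distance 1 of the UAV cell."""
    x, y = cell
    return sum(1 for ux, uy in USERS if max(abs(ux - x), abs(uy - y)) <= 1)


def step(cell, action):
    """Move the UAV, clamping at the grid boundary; return (next_cell, reward)."""
    dx, dy = ACTIONS[action]
    nxt = (min(max(cell[0] + dx, 0), GRID - 1), min(max(cell[1] + dy, 0), GRID - 1))
    return nxt, coverage(nxt)


def greedy(q, cell):
    """Action with the highest Q-value in the given cell."""
    return max(range(len(ACTIONS)), key=lambda b: q[(cell, b)])


def train(episodes=4000, steps=25, alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration and random start cells."""
    rng = random.Random(seed)
    q = {((x, y), a): 0.0
         for x in range(GRID) for y in range(GRID) for a in range(len(ACTIONS))}
    for _ in range(episodes):
        cell = (rng.randrange(GRID), rng.randrange(GRID))
        for _ in range(steps):
            a = rng.randrange(len(ACTIONS)) if rng.random() < eps else greedy(q, cell)
            nxt, r = step(cell, a)
            best_next = max(q[(nxt, b)] for b in range(len(ACTIONS)))
            # Q-learning update towards the bootstrapped target r + gamma * max Q(s', .)
            q[(cell, a)] += alpha * (r + gamma * best_next - q[(cell, a)])
            cell = nxt
    return q


def greedy_path(q, start, steps=10):
    """Follow the learned greedy policy from a start cell."""
    cell, path = start, [start]
    for _ in range(steps):
        cell, _ = step(cell, greedy(q, cell))
        path.append(cell)
    return path


q_table = train()
path = greedy_path(q_table, start=(0, 0))
print("final hover cell:", path[-1], "users covered:", coverage(path[-1]))
```

A realistic setup would replace the hand-made reward with measured or simulated link quality and would likely need function approximation (for example a deep Q-network) instead of a lookup table.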

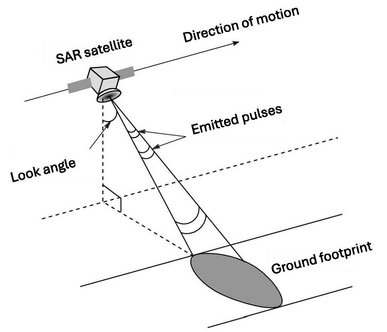

Many locations in Denmark are covered by clouds for most of the year. As a result, visible and infrared sensing cannot provide an accurate picture of conditions on the Earth's surface. Synthetic aperture radar, whose microwave signals penetrate clouds, can provide an accurate picture of the surface instead. Accurate knowledge of ground conditions can benefit many sectors, including agriculture and livestock.

The objective of the project is to process synthetic aperture radar (SAR) data of the Earth's surface obtained from a satellite. The data is processed using sparse and SAR signal processing algorithms, and deep neural networks are used to improve classification and forecasting.

In short, the project covers sparse processing of synthetic aperture radar data acquired by satellites over different locations on Earth, combining sparse signal processing with artificial intelligence.
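As a minimal sketch of the sparse-recovery step, the code below solves an L1-regularized least-squares problem with the iterative shrinkage-thresholding algorithm (ISTA). The random Gaussian matrix `A` is only a stand-in for a real SAR measurement operator, and the data are real-valued and synthetic; actual SAR processing works with complex-valued echoes and a physically derived forward model.

```python
import numpy as np


def soft_threshold(v, t):
    """Element-wise soft thresholding, the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(A, y, lam, n_iter=3000):
    """Minimize 0.5 * ||y - A x||_2^2 + lam * ||x||_1 with ISTA."""
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the data-fit gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)  # gradient of the least-squares term
        x = soft_threshold(x - grad / L, lam / L)
    return x


rng = np.random.default_rng(0)
m, n, k = 64, 128, 5  # measurements, unknowns, non-zeros (hypothetical sizes)
A = rng.standard_normal((m, n)) / np.sqrt(m)  # stand-in for a SAR measurement operator
x_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
y = A @ x_true  # noiseless synthetic measurements

x_hat = ista(A, y, lam=0.01)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print(f"relative error: {rel_err:.4f}")
```

ISTA is chosen here for its simplicity; accelerated variants such as FISTA converge faster, and learned (unrolled) versions are one way such iterations are combined with neural networks.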

Learning outcomes include understanding sparse signal processing for SAR, understanding the impairments caused by the satellite channel, obtaining a high-resolution image of the Earth's surface, and performing classification and forecasting using deep neural networks.

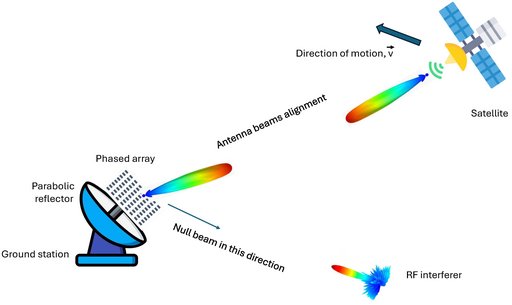

Low Earth orbit (LEO) satellites move at a very high velocity (about 7.8 km/s), and the link distance is hundreds of kilometers. Therefore, the antennas on the RF link between the satellite and the ground station must be kept aligned. The objective of the project is to create a high-gain antenna beam that can be steered in space to stay aligned with a fast-moving LEO satellite. In addition, cognitive beamforming and steering (through reinforcement learning) can be used to suppress RF interferers.
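Electronic steering of a phased array amounts to applying a progressive phase shift across the elements. The sketch below assumes a uniform linear array (ULA) of 8 isotropic elements with half-wavelength spacing, steered 25° from broadside; these values are illustrative, and the array factor ignores element patterns and mutual coupling.

```python
import numpy as np


def steering_phases(n_elements, spacing_wl, theta0_deg):
    """Progressive phase (radians) per element that steers a ULA main beam to theta0.

    spacing_wl is the element spacing in wavelengths; angles are from broadside.
    """
    n = np.arange(n_elements)
    return -2 * np.pi * spacing_wl * n * np.sin(np.radians(theta0_deg))


def array_factor(phases, spacing_wl, theta_deg):
    """Normalized array-factor magnitude of a ULA with the given excitation phases."""
    n = np.arange(len(phases))[:, None]
    theta = np.radians(np.atleast_1d(theta_deg))[None, :]
    geometric = 2 * np.pi * spacing_wl * n * np.sin(theta)  # path-length phase
    return np.abs(np.exp(1j * (geometric + phases[:, None])).sum(axis=0)) / len(phases)


phases = steering_phases(n_elements=8, spacing_wl=0.5, theta0_deg=25.0)
angles = np.linspace(-90, 90, 1801)  # 0.1 degree grid
af = array_factor(phases, 0.5, angles)
print(f"main lobe at {angles[np.argmax(af)]:.1f} deg")
```

To track a LEO satellite, `theta0_deg` would be recomputed continuously from the predicted satellite position. Interference suppression would additionally require placing nulls toward the interferers, which this sketch does not do.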

The learning outcomes include optimum phased array antenna design, with associated beamforming and steering that can be controlled cognitively through reinforcement learning. Moreover, students will learn how alignment affects satellite communications; in particular, good antenna beam alignment and interference suppression help achieve high throughput.

Students are expected to work with HFSS software, PCB antenna manufacturing, and other RF equipment for beamforming and steering.

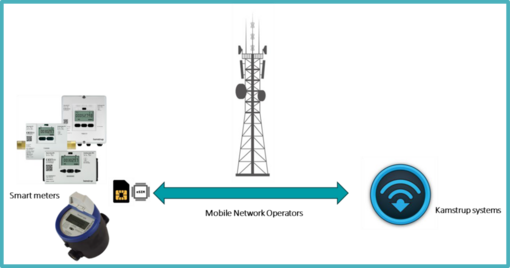

Smart Access Technology Algorithms for Efficient Connectivity in 3GPP Radio Environments

Kamstrup develops metering systems and deploys communication infrastructure for remote data retrieval from numerous smart meter installations, many of which are battery-powered. To ensure a battery life of more than 15 years, data retrieval methods must be carefully designed. As the number of connected devices grows, many will use 3GPP cellular IoT LPWAN technologies such as LTE-M and NB-IoT. Understanding their power-saving features and access technology capabilities is therefore crucial for battery-powered applications. This study focuses on LTE-M and NB-IoT and aims to develop an algorithm that uses local data sources to optimize connectivity while minimizing power consumption. Kamstrup can provide radio performance data for algorithm design and hardware for proofs of concept.
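One way to reason about the trade-off is a simple average-current battery model. In the sketch below, every number (charge per report, sleep current, battery capacity, reporting rate) is a made-up placeholder that does not describe either technology, and `AccessProfile` and `pick_access` are hypothetical names; a real algorithm would derive these figures from radio performance data and the device's actual power-saving configuration (for example PSM or eDRX timers).

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365
SECONDS_PER_DAY = 86400


@dataclass
class AccessProfile:
    """Hypothetical per-technology energy figures for one meter at one site."""
    name: str
    charge_per_report_mAs: float  # charge drawn by one wake-up and transmit cycle
    sleep_current_uA: float  # floor current while in a power-saving state


def battery_life_years(p, reports_per_day, capacity_mAh):
    """Battery life from average current: sleep floor plus amortized report charge."""
    avg_current_mA = (p.sleep_current_uA / 1000
                      + reports_per_day * p.charge_per_report_mAs / SECONDS_PER_DAY)
    return capacity_mAh / avg_current_mA / HOURS_PER_YEAR


def pick_access(profiles, reports_per_day, capacity_mAh):
    """Choose the profile with the longest estimated battery life."""
    return max(profiles, key=lambda p: battery_life_years(p, reports_per_day, capacity_mAh))


# Made-up figures for illustration only; they do not characterize LTE-M or NB-IoT.
candidates = [AccessProfile("LTE-M", 3000.0, 5.0), AccessProfile("NB-IoT", 4500.0, 3.0)]
best = pick_access(candidates, reports_per_day=4, capacity_mAh=8000)
for p in candidates:
    print(p.name, round(battery_life_years(p, 4, 8000), 1), "years")
print("selected:", best.name)
```

A connectivity algorithm built on local data could re-estimate `charge_per_report_mAs` per site (for example from observed coverage conditions) and re-run the selection whenever conditions change.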

Beam Steering and Beamforming Algorithms for Nanosatellite Communications

The goal of this project is to develop beam steering and beamforming algorithms for nanosatellites. A depiction of beam steering is shown in the figure: by controlling the phases of the signals feeding the antennas, the direction of the whole radiation pattern can be controlled electronically. This is achieved by providing an input steering angle to the controller C in the diagram. Beamforming is similar, but the shape of the pattern can be designed as well. The work will focus on beam steering and beamforming algorithms that steer the beam from an input reference angle, provided by the satellite attitude determination and control system (ADCS), to a desired target angle. Reference signals to digital phase shifters will be considered. The algorithms will be developed in a high-level language for design and analysis, and implemented on a low-end platform such as an Arduino or a Raspberry Pi. The student will have the opportunity to work hands-on with our NAN lab facilities and nanosatellite equipment, and to collaborate on current research in satellite projects.

Prerequisites: Programming in a high-level (e.g. Python) and/or low-level (C/C++) language, Linux, embedded programming.

Contacts: Rune Hylsberg Jacobsen (rhj@eng.au.dk)

(image sources: Wikipedia (top), Adaptive beam steering (bottom))
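The mapping from an ADCS reference angle to digital phase-shifter settings can be sketched as follows. It assumes a 1-D uniform linear array whose broadside coincides with the body axis reported by the ADCS, half-wavelength element spacing, and ideal digital phase shifters with a configurable number of bits; `phase_shifter_codes` is a hypothetical helper, not part of any existing library.

```python
import math


def phase_shifter_codes(target_deg, attitude_deg, n_elements=4, spacing_wl=0.5, bits=6):
    """Map an ADCS attitude angle and a desired target angle to phase-shifter words.

    Assumes a 1-D uniform linear array whose broadside is aligned with the body axis
    reported by the ADCS, and ideal bits-bit phase shifters (LSB = 2*pi / 2**bits).
    """
    steer_deg = target_deg - attitude_deg  # required steering angle in the body frame
    if abs(steer_deg) >= 90:
        raise ValueError("target is outside the array's forward half-space")
    levels = 2 ** bits
    lsb = 2 * math.pi / levels
    codes = []
    for n in range(n_elements):
        phase = -2 * math.pi * spacing_wl * n * math.sin(math.radians(steer_deg))
        phase %= 2 * math.pi  # wrap into [0, 2*pi)
        codes.append(round(phase / lsb) % levels)  # nearest quantization level
    return codes


print(phase_shifter_codes(target_deg=10.0, attitude_deg=-20.0))
```

Because it uses only the `math` module, the same logic ports directly to C/C++ on an Arduino or Raspberry Pi. Quantizing to a finite number of bits introduces small pointing errors and quantization sidelobes compared with ideal continuous phases.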